This chapter describes the steps involved in developing research questions and study objectives for observational comparative effectiveness research (CER). The process begins with identifying the decisions under consideration, determining who the decisionmakers and stakeholders are in the specific area of research, and understanding the context in which decisions are being made. The next step is synthesizing the current knowledge base and identifying evidence gaps, followed by conceptualizing the research problem, which includes developing questions that address the gaps in existing evidence. Developing these initial questions clarifies the stage of knowledge that the study is designed to address. Identifying which questions are critical to reduce decisional uncertainty and minimize gaps in the current knowledge base is an important part of developing a successful framework. In particular, it is beneficial to look at which study populations, interventions, comparisons, outcomes, timeframe, and settings (PICOTS framework) are most important to decisionmakers in weighing the balance of harms and benefits of action. Some research questions are easier to operationalize than others, and study limitations should be recognized and accepted from an early stage. The level of new scientific evidence that the decisionmaker requires to make a decision or take action must be recognized. Lastly, the magnitude of effect must be specified. This can mean defining what is a clinically meaningful difference in the study endpoints from the perspective of the decisionmaker and/or defining what is a meaningful difference from the patient's perspective.

Overview

The foundation for designing a new research protocol is the study's objectives and the questions that will be investigated through its implementation. All aspects of study design and analysis are based on the objectives and questions articulated in a study's protocol. Consequently, it is exceedingly important that a study's objectives and questions be formulated meticulously and written precisely in order for the research to be successful in generating new knowledge that can be used to inform health care decisions and actions.

An important aspect of CER1 and other forms of translational research is the potential for early involvement and inclusion of patients and other stakeholders to collaborate with researchers in identifying study objectives, key questions, major study endpoints, and the evidentiary standards that are needed to inform decisionmaking. The involvement of stakeholders in formulating the research questions increases the applicability of the study to the end-users and facilitates appropriate translation of the results into health care practice and use by patient communities. While stakeholders may be defined in multiple ways, for the purposes of this User's Guide, a broad definition will be used. Hence, stakeholders are defined as individuals or organizations that use scientific evidence for decisionmaking and therefore have an interest in the results of new research. Implicit in this definition of stakeholders is the importance for stakeholders to understand the scientific process, including considerations of bioethics and the limitations of research, particularly with regard to studies involving human subjects. Ideally, stakeholders also should express commitment to using objective scientific evidence to inform their decisionmaking and recognize that disregarding sound scientific methods often will undermine decisionmaking. For stakeholder organizations, it is also advantageous if the organization has well-established processes for transparently reviewing and incorporating research findings into decisions as well as organized channels for disseminating research results.

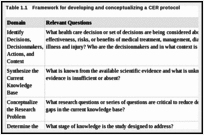

There are at least seven essential steps in the conceptualization and development of a research question or set of questions for an observational CER protocol. These steps are presented as a general framework in Table 1.1 below and elaborated upon in the subsequent sections of this chapter. The framework is based on the principle that researchers and stakeholders will work together to objectively lay out the research problems, research questions, study objectives, and key parameters for which scientific evidence is needed to inform decisionmaking or health care actions. The intent of this framework is to facilitate communication between researchers and stakeholders in conceptualizing the research problem and the design of a study (or a program of research involving a series of studies) in order to maximize the potential that new knowledge will be created from the research with results that can inform decisionmaking. To do this, research results must be relevant, applicable, unbiased and sufficient to meet the evidentiary threshold for decisionmaking or action by stakeholders. In order for the results to be valid and credible, all persons involved must be committed to protecting the integrity of the research from bias and conflicts of interest. Most importantly, the study must be designed to protect the rights, welfare, and well-being of subjects involved in the research.

Table 1.1. Framework for developing and conceptualizing a CER protocol.

Identifying Decisions, Decisionmakers, Actions, and Context

In order for research findings to be useful for decisionmaking, the study protocol should clearly articulate the decisions or actions for which stakeholders seek new scientific evidence. While only some studies may be sufficiently robust for making decisions or taking action, statements that describe the stakeholders' decisions will help those who read the protocol understand the rationale for the study and its potential for informing decisions or for translating the findings into changes in health care practices. This information also improves the ability of protocol readers to understand the purpose of the study so they can critically review its design and provide recommendations for ways it may be potentially improved. If stakeholders have a need to make decisions within a critical time frame for regulatory, ethical, or other reasons, this interval should be expressed to researchers and described in the protocol. In some cases, the time frame for decisionmaking may influence the choice of outcomes that can be studied and the study designs that can be used. For some stakeholders' questions, research and decisionmaking may need to be divided into stages, since it may take years for outcomes with long lag times to occur, and research findings will be delayed until they do.

In writing this section of the protocol, investigators should ask stakeholders to describe the context in which the decision will be made or actions will be taken. This context includes the background and rationale for the decision, key areas of uncertainty and controversies surrounding the decision, ways scientific evidence will be used to inform the decision, the process stakeholders will use to reach decisions based on scientific evidence, and a description of the key stakeholders who will use or potentially be affected by the decision. By explaining these contextual factors that surround the decision, investigators will be able to work with stakeholders to determine the study objectives and other major parameters of the study. This work also provides the opportunity to discuss how the tools of science can be applied to generate new evidence for informing stakeholder decisions and what limits may exist in those tools. In addition, this initial step begins to clarify the number of analyses necessary to generate the evidence that stakeholders need to make a decision or take other actions with sufficient certainty about the outcomes of interest. Finally, the contextual information facilitates advance planning and discussions by researchers and stakeholders about approaches to translation and implementation of the study findings once the research is completed.

Synthesizing the Current Knowledge Base

In designing a new study, investigators should conduct a comprehensive review of the literature, critically appraise published studies, and synthesize what is known related to the research objectives. Specifically, investigators should summarize in the protocol what is known about the efficacy, effectiveness, and safety of the interventions and about the outcomes being studied. Furthermore, investigators should discuss measures used in prior research and whether these measures have changed over time. These descriptions will provide background on the knowledge base for the current protocol. It is equally important to identify which elements of the research problem are unknown because evidence is absent, insufficient, or conflicting.

For some research problems, systematic reviews of the literature may be available and can be useful resources to guide the study design. The AHRQ Evidence-based Practice Centers2 and the Cochrane Collaboration3 are examples of established programs that conduct thorough systematic reviews, technology assessments, and specialized comparative effectiveness reviews using standardized methods. When available, systematic reviews and technology assessments should be consulted as resources for investigators to assess the current knowledge base when designing new studies and working with stakeholders.

When reviewing the literature, investigators and stakeholders should identify the most relevant studies and guidelines about the interventions that will be studied. This will allow readers to understand how new research will add to the existing knowledge base. If guidelines are a source of information, then investigators should examine whether these guidelines have been updated to incorporate recent literature. In addition, investigators should assess the health sciences literature to determine what is known about expected effects of the interventions based on current understanding of the pathophysiology of the target condition. Furthermore, clinical experts should be consulted to help identify gaps in current knowledge based on their expertise and interactions with patients. Relevant questions to ask to assess the current knowledge base for development of an observational CER study protocol are:

What are the most relevant studies and guidelines about the interventions, and why are these studies relevant to the protocol (e.g., because of the study findings, time period conducted, populations studied, etc.)?

Are there differences in recommendations from clinical guidelines that would indicate clinical equipoise?

What else is known about the expected effects of the interventions based on current understanding of the pathophysiology of the targeted condition?

What do clinical experts say about gaps in current knowledge?

Conceptualizing the Research Problem

In designing studies for addressing stakeholder questions, investigators should engage multiple stakeholders in discussions about how the research problem is conceptualized from the stakeholders' perspectives. These discussions will aid in designing a study that can be used to inform decisionmaking. Together, investigators and stakeholders should work collaboratively to determine the major objectives of the study based on the health care decisions facing stakeholders. As pointed out by Heckman,4 research objectives should be formalized outside considerations of available data and the inferences that can be made from various statistical estimation approaches. Doing so will allow the study objectives to be determined by stakeholder needs rather than the availability of existing data. A thorough discussion of these considerations is beyond the scope of this chapter, but some important considerations are summarized in supplement 1 of this User's Guide.

In order to conceptualize the problem, stakeholders and other experts should be asked to describe the potential relationships between the intervention and important health outcomes. This description will help researchers develop preliminary hypotheses about the stated relationships. Likewise, stakeholders, researchers, and other experts should be asked to enumerate all major assumptions that affect the conceptualization of the research problem, but will not be directly examined in the study. These assumptions should be described in the study protocol and in reporting final study results. By clearly stating the assumptions, protocol reviewers will be better able to assess how the assumptions may influence the study results.

Based on the conceptualization of the research problem, investigators and stakeholders should make use of applicable scientific theory in designing the study protocol and developing the analytic plan. Research that is designed using a validated theory has a higher potential to reach valid conclusions and improve the overall understanding of a phenomenon. In addition, theory will aid in the interpretation of the study findings, since these results can be put in context with the theory and with past research. Depending on the nature of the inquiry, theory from specific disciplines such as health behavior, sociology, or biology could be the basis for designing the study. In addition, the research team should work with stakeholders to develop a conceptual model or framework to guide the implementation of the study. The protocol should also contain one or more figures that summarize the conceptual model or framework as it applies to the study. These figures will allow readers to understand the theoretical or conceptual basis for the study and how the theory is operationalized for the specific study. The figures should diagram relationships between study variables and outcomes to help readers of the protocol visualize relationships that will be examined in the study.

For research questions about causal associations between exposures and outcomes, causal models such as directed acyclic graphs (DAGs) may be useful tools in designing the conceptual framework for the study and developing the analytic plan. The value of DAGs in the context of refining study questions is that they make assumptions explicit in ways that can clarify gaps in knowledge. Free software such as DAGitty is available for creating, editing, and analyzing causal models. A thorough discussion of DAGs is beyond the scope of this chapter, but more information about DAGs is available in supplement 2 of this User's Guide.
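As a rough illustration of how a causal diagram makes assumptions explicit, the sketch below encodes a small hypothetical diagram as a Python dictionary, checks that it contains no directed cycles, and lists variables that are assumed to cause both the exposure and the outcome. The variable names and arrows are invented for illustration and do not come from any study. The helper functions are not part of DAGitty or any other package, and the common-cause check is a simplified screen, not a full identification analysis.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical causal diagram: each key is a variable and each value is the
# set of variables it is ASSUMED to directly cause. Names are illustrative.
EDGES = {
    "age": {"treatment", "outcome"},
    "disease_severity": {"treatment", "outcome"},
    "treatment": {"biomarker"},
    "biomarker": {"outcome"},
    "outcome": set(),
}


def is_acyclic(edges):
    """Return True if the diagram has no directed cycles."""
    # TopologicalSorter expects node -> predecessors, so invert the arrows.
    preds = {node: set() for node in edges}
    for cause, effects in edges.items():
        for effect in effects:
            preds.setdefault(effect, set()).add(cause)
    try:
        list(TopologicalSorter(preds).static_order())
        return True
    except CycleError:
        return False


def ancestors(edges, node):
    """Return every variable with a directed path into `node`."""
    found = set()
    frontier = [node]
    while frontier:
        current = frontier.pop()
        for cause, effects in edges.items():
            if current in effects and cause not in found:
                found.add(cause)
                frontier.append(cause)
    return found


def common_causes(edges, exposure, outcome):
    """Variables that reach the exposure and also reach the outcome by a
    path that does not pass through the exposure (simplified screen)."""
    without_exposure = {
        node: effects - {exposure}
        for node, effects in edges.items()
        if node != exposure
    }
    return ancestors(edges, exposure) & ancestors(without_exposure, outcome)


if __name__ == "__main__":
    print("Acyclic:", is_acyclic(EDGES))
    print("Candidate common causes:",
          sorted(common_causes(EDGES, "treatment", "outcome")))
```

Writing the diagram down in this form forces each assumed arrow to be stated, which is the property the text highlights: reviewers can see which relationships are assumed and which are left out.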

The following list of questions may be useful for defining and describing a study's conceptual framework in a CER protocol:

What are the main objectives of the study, as related to specific decisions to be made?

What are the major assumptions of decisionmakers, investigators, and other experts about the problem or phenomenon being studied?

What relationships, if any, do experts hypothesize exist between interventions and outcomes?

What conceptual model will guide the study design and interpretation?

- What is known about each element of the model?

- Can relationships be expressed by causal diagrams?

Determining the Stage of Knowledge Development for the Study Design

The scientific method is a process of observation and experimentation through which the evidence base expands as new knowledge is developed. Therefore, stakeholders and investigators should consider whether a program of research comprising a sequential or concurrent series of studies, rather than a single study, is needed to adequately inform a decision. Staging the research into multiple studies and making interim decisions may improve the final decision and make judicious use of scarce research resources. In some cases, the results of preliminary studies, descriptive epidemiology, or pilot work may be helpful in making interim decisions and designing further research. Overall, a planned series of related studies or a program of research may be needed to adequately address stakeholders' decisions.

An example of a structured program of research is the four phases of clinical studies used by the Food and Drug Administration (FDA) to reach a decision about whether or not a new drug is safe and efficacious for market approval in the United States. Using this analogy, the final decision about whether a drug is efficacious and safe to be marketed for specific medical indications is based upon the accumulation of scientific evidence from a series of studies (i.e., not from any individual study), which are conducted in multiple sequential phases. The evidence generated in each phase is reviewed to make interim decisions about the safety and efficacy of a new pharmaceutical until ultimately all the evidence is reviewed to make a final decision about drug approval.

Under the FDA model for decisionmaking, initial research involves laboratory and animal tests. If the evidence generated in these studies indicates that the drug is active and not toxic, the sponsor submits an application to the FDA for an “investigational new drug.” If the FDA approves, human testing for safety and efficacy can begin. The first phase of human testing is usually conducted in a limited number of healthy volunteers (phase 1). If these trials show evidence that the product is safe in healthy volunteers, then the drug is further studied in a small number of volunteers who have the targeted condition (phase 2). If phase 2 studies show that the drug has a therapeutic effect and lacks significant adverse effects, trials with large numbers of people are conducted to determine the drug's safety and efficacy (phase 3). Following these trials, all relevant scientific studies are submitted to the FDA for a decision about whether the drug should be approved for marketing. If there are additional considerations like special safety issues, observational studies may be required to assess the safety of the drug in routine clinical care after the drug is approved for marketing (phase 4). Overall, the decisionmaking and research are staged so that the cumulative findings from all studies are used by the FDA to make interim decisions until the final decision is made about whether a medical product will be approved for marketing.

While most decisions about the comparative effectiveness of interventions will not need such extensive testing, it still may be prudent to stage research in a way that allows for interim decisions and sequentially more rigorous studies. On the other hand, conditional approval or interim decisions may risk confusing patients and other stakeholders about the extent to which current evidence indicates that a treatment is effective and safe for all individuals with a health condition. For instance, under this staged approach, new treatments could rapidly diffuse into a market even when there is limited evidence of long-term effectiveness and safety for all potential users. An illustrative example is lung-volume reduction surgery, which was increasingly being used to treat severe emphysema despite limited evidence supporting its safety and efficacy until new research raised questions about the safety of the procedure.6

Below is one potential categorization for the stages of knowledge development as related to informing decisions about questions of comparative effectiveness:

Descriptive analysis

Hypothesis generation

Feasibility studies/proof of concept

Hypothesis supporting

Hypothesis testing

The first stages (i.e., descriptive analysis, hypothesis generation, and feasibility studies) are not mutually exclusive and usually are not intended to provide conclusive results for most decisions. Instead, these stages provide preliminary evidence or feasibility testing before larger, more resource-intensive studies are launched. Results from these categories of studies may allow for interim decisionmaking (e.g., conditional approval for reimbursement of a treatment while further research is conducted). While a phased approach to research may postpone the time when a conclusive decision can be reached, it does help to conserve resources, such as those that may be consumed in launching a large multicenter study when a smaller study may be sufficient. Investigators will need to engage stakeholders to prioritize what stage of research may be most useful for the practical range of decisions that will be made.

Investigators should discuss in the protocol what stage of knowledge the current study will fulfill in light of the actions available to different stakeholders. This will allow reviewers of the protocol to assess the degree to which the evidence generated in the study holds the potential to fill specific knowledge gaps. For studies that are described in the protocol as preliminary, this may also help readers understand other tradeoffs that were made in the design of the study, in terms of methodological limitations that were accepted a priori in order to gather preliminary information about the research questions.

Defining and Refining Study Questions Using PICOTS Framework

As recommended in other AHRQ methods guides,7 investigators should engage stakeholders in a dialogue in order to understand the objectives of the research in practical terms, particularly so that investigators know the types of decisions that the research may affect. In working with stakeholders to develop research questions that can be studied with scientific methods, investigators may ask stakeholders to identify six key components of the research questions that will form the basis for designing the study. These components are reflected in the PICOTS typology and are shown below in Table 1.2. These components represent the critical elements that will help investigators design a study that will be able to address the stakeholders' needs. Additional references that expand upon how to frame research questions can be found in the literature.8-9

Table 1.2. PICOTS typology for developing research questions.

The PICOTS typology outlines the key parts of the research questions that the study will be designed to address.10 As a new research protocol is developed these questions can be presented in preliminary form and refined as other steps in the process are implemented. After the preliminary questions are refined, investigators should examine the questions to make sure that they will meet the needs of the stakeholders. In addition, they should assess whether the questions can be answered within the timeframe allotted and with the resources that are available for the study.
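One way to keep track of a preliminary question while it is being refined is to record the six PICOTS components in a small structure and check which ones are still unspecified. The sketch below is only an illustration under that assumption. The class name, field names, and the example question are hypothetical and are not drawn from the PICOTS table.

```python
from dataclasses import dataclass, fields


@dataclass
class PicotsQuestion:
    """Draft research question broken into the six PICOTS components."""
    population: str = ""
    intervention: str = ""
    comparison: str = ""
    outcomes: str = ""
    timeframe: str = ""
    setting: str = ""

    def missing_components(self):
        """Return the names of components that are still blank."""
        return [f.name for f in fields(self)
                if not getattr(self, f.name).strip()]

    def is_complete(self):
        """True once every component has been specified."""
        return not self.missing_components()


# Hypothetical preliminary question; timeframe and setting not yet agreed.
draft = PicotsQuestion(
    population="adults with type 2 diabetes",
    intervention="drug A",
    comparison="drug B",
    outcomes="hospitalization for heart failure",
)

if __name__ == "__main__":
    print("Still to be defined with stakeholders:",
          draft.missing_components())
```

A checklist of this kind does not judge whether a component is well chosen. It only flags which parts of the question investigators and stakeholders have not yet discussed.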

Endpoints

Since stakeholders ultimately determine effectiveness, it is important for investigators to ensure that the study endpoints and outcomes will meet their needs. Stakeholders need to articulate to investigators the health outcomes that matter most to them in making decisions about treatment or taking other health care actions. The endpoints that stakeholders will use to determine effectiveness may vary considerably. Unlike efficacy trials, in which clinical endpoints and surrogate measures are frequently used to determine efficacy, effectiveness may need to be determined based on several measures, many of which are not biological. These endpoints may be categorized as clinical endpoints, patient-reported outcomes and quality of life, health resource utilization, and utility measures. Types of measures that could be used are mortality, morbidity and adverse effects, quality of life, costs, or multiple outcomes. Chapter 6 gives a more extensive discussion of potential outcome measures of effectiveness.

The reliability, validity, and accuracy of study instruments in measuring the concepts they purport to measure will also need to be acceptable to stakeholders. For instance, if stakeholders are interested in quality of life as an outcome but do not believe there is an adequate measure of quality of life, then measurement development may need to be done prior to study initiation, or other measures will need to be identified by stakeholders.

Discussing Evidentiary Need and Uncertainty

Investigators and stakeholders should discuss the tradeoffs of different study designs that may be used for addressing the research questions. This dialogue will help researchers design a study that will be relevant and useful to the needs of stakeholders. All study designs have strengths and weaknesses, the latter of which may limit the conclusiveness of the final study results. Likewise, some decisions may require evidence that cannot be obtained from certain designs. In addition to design weaknesses, there are also practical tradeoffs that need to be considered in terms of research resources, like the time needed to complete the study, the availability of data, investigator expertise, subject recruitment, human subjects protection, research budget, difference to be detected, and lost-opportunity costs of doing the research instead of other studies that have priority for stakeholders. An important decision that will need to be made is whether or not randomization is needed for the questions being studied. There are several reasons why randomization might be needed, such as determining whether an FDA-approved drug can be used for a new use or indication that was not studied as part of the original drug approval process. A paper by Concato includes a thorough discussion of issues to consider when deciding whether randomization is necessary.11

In discussing the tradeoffs of different study designs, researchers and stakeholders may wish to discuss the principal goals of research and ensure that researchers and stakeholders are aligned in their understanding of what is meant by scientific evidence. Fundamentally, research is a systematic investigation that uses scientific methods to measure, collect, and analyze data for the advancement of knowledge. This advancement is through the independent peer review and publication of study results, which are collectively referred to as scientific evidence. One definition of scientific evidence has been proposed by Normand and McNeil12 as:

… the accumulation of information to support or refute a theory or hypothesis. … The idea is that assembling all the available information may reduce uncertainty about the effectiveness of the new technology compared to existing technologies in a setting where we believe particular relationships exist but are uncertain about their relevance …

While the primary aim of research is to produce new knowledge, the Normand and McNeil concept of evidence emphasizes that research helps create knowledge by reducing uncertainty about outcomes. However, rarely, if ever, does research eliminate all uncertainty around a decision. In some cases, successful research will answer an important question and reduce uncertainty related to that question, but it may also increase uncertainty by leading to more, better informed questions regarding unknowns. As a result, nearly all decisions face some level of uncertainty even in a field where a body of research has been completed. This distinction is also critical because it helps to separate the research from the subsequent actions that decisionmakers may take based on their assessment of the research results. Those subsequent actions may be informed by the research findings but will also be based on stakeholders' values and resources. Hence, as the definition by Normand and McNeil implies, research generates evidence but stakeholders decide whether to act on the evidence. Scientific evidence informs decisions to the extent it can adequately reduce the uncertainty about the problem for the stakeholder. Ultimately, treatment decisions are only guided by an assessment of the certainty that a course of therapy will lead to the outcomes of interest and the likelihood that this conclusion will be affected by the results of future studies.

In conceptualizing a study design, it is important for investigators to understand what constitutes sufficient and valid evidence from the stakeholder's perspective. In other words, what type of evidence will be required to inform the stakeholder's decision to act or to make a conscious decision not to take action? Evidence needed for action may vary by type of stakeholder and the scope of decisions that the stakeholder is making. For instance, a stakeholder who is making a population-based decision, such as whether to provide insurance coverage for a new medical device with many alternatives, may need substantially robust research findings in order to take action and provide that insurance coverage. In this example, the stakeholder may only accept as evidence a study with strong internal validity and generalizability (i.e., one conducted in a nationally representative sample of patients with the disease). On the other hand, a patient who has a health condition for which there are few treatments may be willing to accept lower-quality evidence in order to make a decision about whether to proceed with treatment despite a higher level of uncertainty about the outcome.

In many cases, there may exist a gradient of actions that can be taken based on available evidence. Quanstrum and Hayward13 have discussed this gradient and argued that health care decisionmaking is changing, partly because more information is available to patients and other stakeholders about treatment options. As shown in the upper panel (A) in Figure 1.1, many people may currently believe that health care treatment decisions are basically uniform for most people and under most circumstances. Panel A represents a hypothetical treatment whereby there is an evidentiary threshold or a point at which treatment is always beneficial and should be recommended. On the other hand, below this threshold care provides no benefits and treatment should be discouraged. Quanstrum and Hayward argue that, increasingly, health care decisions are more like the lower panel (B). This panel portrays health care treatments as providing a large zone of discretion where benefits may be low or modest for most people. While above this zone treatment may always be recommended, individuals who fall within the zone may have questionable health benefits from treatment. As a result, different decisionmakers may take different actions based on their individual preferences.

Figure 1.1. Conceptualization of clinical decisionmaking. See Quanstrum KH, Hayward RA (Reference #). This figure is copyrighted by the Massachusetts Medical Society and reprinted with permission.

In light of this illustration, the following questions are suggested for discussion with stakeholders to help elicit the amount of uncertainty that is acceptable so that the study design can reach an appropriate level of evidence for the decision at hand:

What level of new scientific evidence does the decisionmaker need to make a decision or take action?

What quality of evidence is needed for the decisionmaker to act?

What level of certainty of the outcome is needed by the decisionmaker(s)?

How specific does the evidence need to be?

Will decisions require consensus of multiple parties?

Additional Considerations When Considering Evidentiary Needs

As mentioned earlier, different stakeholders may disagree on the usefulness of different research designs, but it should be pointed out that this disagreement may be because stakeholders have different scopes of decisions to make. For example, high-quality research that is conclusive may be needed to make a decision that will affect the entire nation. On the other hand, results with more uncertainty as to the magnitude of the effect estimate(s) may be acceptable in making some decisions such as those affecting fewer people or where the risks to health are low. Often this disagreement occurs when different stakeholders debate whether evidence is needed from a new randomized controlled trial or whether evidence can be obtained from an analysis of an existing database. In this debate, both sides need to clarify whether they are facing the same decision or the decisions are different, particularly in terms of their scope.

Groups committed to evidence-based decisionmaking recognize that scientific evidence is only one component of the process of making decisions. Evidence generation is the goal of research, but evidence is not the only facet of evidence-based decisionmaking. In addition to scientific evidence, decisionmaking involves the consideration of (a) values, particularly the values placed on benefits and harms, and (b) resources.14 Stakeholder differences in values and resources may mean that different decisions are made based on the same scientific evidence. Moreover, differences in values may create conflict in the decisionmaking process. One stakeholder may believe a particular study outcome is the most important, while another stakeholder may believe a different outcome is the most important for determining effectiveness.

Likewise, there may be inherent conflicts in values between individual decisionmaking and population decisionmaking, even though these decisions are often interrelated. For example, an individual may have a higher tolerance for treatment risk in light of the expected treatment benefits for him or her. On the other hand, a regulatory health authority may determine that the population risk is too great without sufficient evidence that treatment provides benefits to the population. An example of this difference in perspective can be seen in how different decisionmakers responded to evidence about the drug Avastin® (bevacizumab) for the treatment of metastatic breast cancer. In this case, the FDA revoked its approval of the breast cancer indication for Avastin after concluding that the drug had not been shown to be safe and effective for that use. Nonetheless, Medicare, the public insurance program for the elderly and disabled, continued to allow coverage when a physician prescribed the drug, even for breast cancer. Likewise, some patient groups were reported to be concerned by the decision, since it presumably would deny some women access to Avastin treatment. For a more thorough discussion of these differences in perspective, the reader is referred to an article by Atkins15 and the examples in Table 1.3 below.

Examples of individual versus population decisions (Adapted from Atkins, 2007).

Specifying Magnitude of Effect

For decisions to be objective, it is important to have an a priori discussion with stakeholders about the magnitude of effect that they believe represents a meaningful difference between treatment options. Researchers will be familiar with the basic tenet that statistically significant differences do not always represent clinically meaningful differences. Hence, researchers and stakeholders will need to understand the instruments that are used to measure differences, including their accuracy, limitations, and other properties. Three key questions are recommended for eliciting from stakeholders the effect sizes that are important to them for making a decision or taking action:

How do patients and other stakeholders define a meaningful difference between interventions?

How do previous studies and reviews define a meaningful difference?

Are patients and other stakeholders interested in superiority or noninferiority as it relates to decisionmaking?
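The distinction between statistical significance, clinical meaningfulness, and noninferiority raised by the questions above can be illustrated with a small calculation. The following is a minimal sketch, not a recommended analysis method: the function names, the normal approximation for the confidence interval, and all numeric values are assumptions introduced here for illustration. In practice, the meaningful difference and the noninferiority margin would come from the discussion with stakeholders.

```python
from statistics import NormalDist


def confidence_interval(diff, se, level=0.95):
    """Two-sided confidence interval for a difference (normal approximation)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff - z * se, diff + z * se


def interpret(diff, se, mcid, ni_margin, level=0.95):
    """Compare an estimated treatment difference with stakeholder-defined margins.

    Assumes positive values of diff favor the new treatment.
    mcid: smallest difference stakeholders consider meaningful (hypothetical input).
    ni_margin: largest deficit stakeholders would accept (hypothetical input).
    """
    lo, hi = confidence_interval(diff, se, level)
    return {
        "ci": (lo, hi),
        # The interval excludes zero.
        "statistically_significant": lo > 0 or hi < 0,
        # The whole interval lies at or beyond the meaningful difference.
        "clinically_meaningful": lo >= mcid,
        # The interval excludes deficits larger than the accepted margin.
        "noninferior": lo > -ni_margin,
    }


# Made-up numbers: an estimated difference of 1.0 points (standard error 0.4),
# where stakeholders consider 3.0 points meaningful and would accept a deficit
# of up to 2.0 points.
result = interpret(diff=1.0, se=0.4, mcid=3.0, ni_margin=2.0)
for key, value in result.items():
    print(key, value)
```

With these illustrative inputs, the difference is statistically significant and noninferior, yet it does not reach the threshold stakeholders defined as meaningful, which is the situation the a priori discussion is meant to anticipate.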

Challenges to Developing Study Questions and Initial Solutions

In developing CER study objectives and questions, there are some potential challenges that face researchers and stakeholders. The involvement of patients and other stakeholders in determining study objectives and questions is a relatively new paradigm, but one that is consistent with established principles of translational research. A key principle of translational research is that users need to be involved in research at the earliest stages for the research to be adopted.16 In addition, most research is currently initiated by an investigator, and traditionally there have been few incentives (and some disincentives) to involving others in designing a new research study. Although the research paradigm is rapidly shifting,17 there is little information about how to structure, process, and evaluate outcomes from initiatives that attempt to engage stakeholders in developing study questions and objectives with researchers. As different approaches are taken to involve stakeholders in the research process, researchers will learn how to optimize the process of stakeholder involvement and improve the applicability of research to the end-users.

Bringing stakeholders together may create some general challenges for the research team. For instance, it may be difficult to identify, engage, or manage all stakeholders who are interested in developing and using scientific evidence to address a problem. A process that allows for public comment on research protocols through Internet postings may be helpful in reaching the widest network of interested stakeholders. Nevertheless, finding stakeholders who can represent all perspectives may not always be practical for the study team. In addition, different stakeholders have different needs, and these competing interests may make prioritization of research questions challenging. Nonetheless, as the science of translational research evolves, the collaboration of researchers with stakeholders will likely become the standard of practice in designing new research.

To help researchers and stakeholders work together, AHRQ has published several online resources that facilitate the involvement of stakeholders in the research process. These include a brief guide for stakeholders that highlights opportunities for taking part in AHRQ's Effective Health Care Program, a facilitation primer with strategies for working with diverse stakeholder groups, a table of suggested tasks for researchers to involve stakeholders in the identification and prioritization of future research, and learning modules with slide presentations on engaging stakeholders in the Effective Health Care Program.18-19 In addition, AHRQ supports the Evidence-based Practice Centers in working with various stakeholders to further develop and prioritize decisionmakers' future research needs, which are published in a series of reports on AHRQ's Web site and on the National Library of Medicine's open-access Bookshelf.20

Likewise, AHRQ supports the active involvement of patients and other stakeholders in the AHRQ DEcIDE program, in which different models of engagement have been used. These models include hosting in-person meetings with stakeholders to create research agendas;21-22 developing research based on questions posed by public payers such as Centers for Medicare and Medicaid Services; addressing knowledge gaps that have been identified in AHRQ systematic reviews through new research; and supporting five research consortia, each of which involves researchers, patients, and other stakeholders working together to develop, prioritize, and implement research studies.

Summary and Conclusion

This chapter provides a framework for formulating study objectives and questions for a research protocol on a CER topic. Implementation of the framework involves collaboration between researchers and stakeholders in conceptualizing the research objectives and questions and the design of the study. In this process, there is a shared commitment to protect the integrity of the research results from bias and conflicts of interest, so that the results are valid for informing decisions and health care actions. Due to the complexity of some health care decisions, the evidence needed for decisionmaking or action may need to be developed from multiple studies, including preliminary research that becomes the underpinning for larger studies. The principles described in this chapter are intended to strengthen the writing of research protocols and enhance the results of the resulting studies, so that they can inform the important decisions facing patients, providers, and other stakeholders about health care treatments and new technologies. Subsequent chapters in this User's Guide provide specific principles for operationalizing the study objectives and research questions in writing a complete study protocol that can be executed as new research.

Checklist: Guidance and key considerations for developing study objectives and questions for observational CER protocols.

References

- 1. Committee on Comparative Effectiveness Research Prioritization, Institute of Medicine. Initial National Priorities for Comparative Effectiveness Research. Washington, DC: The National Academies Press; 2009.

- 2.

- 3.

- 4. Heckman JJ. Econometric causality. Int Statist Rev. 2008;76:1–27.

- 5.

- 6. Ramsey SD, Sullivan SD. Evidence, economics, and emphysema: Medicare's long journey with lung volume reduction surgery. Health Aff (Millwood). 2005 Jan-Feb;24(1):55–66.

- 7. Methods Guide for Effectiveness and Comparative Effectiveness Reviews. Rockville, MD: Agency for Healthcare Research and Quality; Aug, 2011. AHRQ Publication No. 10(11)-EHC063-EF. Chapters available at www.effectivehealthcare.ahrq.gov.

- 8. Parfrey P, Ravani P. On framing the research question and choosing the appropriate research design. Methods Mol Biol. 2009;473:1–17.

- 9. Thabane L, Thomas T, Ye C, et al. Posing the research question: not so simple. Can J Anaesth. 2009 Jan;56(1):71–9.

- 10. Richardson WS, Wilson MC, Nishikawa J, et al. The well-built clinical question: a key to evidence-based decisions. ACP J Club. 1995 Nov-Dec;123(3):A12–3.

- 11. Concato J. When to randomize, or ‘Evidence-based medicine needs medicine-based Evidence.’ Pharmacoepidemiol Drug Saf. 2012 May;21 Suppl 2:6–12.

- 12.

- 13. Quanstrum KH, Hayward RA. Lessons from the mammography wars. N Engl J Med. 2010 Sep 9;363(11):1076–9.

- 14. Muir Gray JA. Evidence-Based Healthcare and Public Health. 3rd ed. Churchill Livingstone; 1997.

- 15. Atkins D. Creating and synthesizing evidence with decision makers in mind: integrating evidence from clinical trials and other study designs. Med Care. 2007 Oct;45(10 Suppl 2):S16–22.

- 16. Rogers E. Diffusion of Innovations. 5th ed. New York, NY: Free Press; 2003.

- 17. Anonymous. Translational research and experimental medicine in 2012. Lancet. 2012 Jan 7;379(9810):1.

- 18.

- 19.

- 20.

- 21. Pickard AS, Lee TA, Solem CT, et al. Prioritizing comparative-effectiveness research topics via stakeholder involvement: an application in COPD. Clin Pharmacol Ther. 2011 Dec;90(6):888–92.

- 22. Gliklich RE, Leavy MB, Velentgas P, et al. Identification of Future Research Needs in the Comparative Management of Uterine Fibroid Disease: A Report on the Priority-Setting Process, Preliminary Data Analysis, and Research Plan, Effective Healthcare Research Report No. 31. Rockville, MD: Agency for Healthcare Research and Quality; Mar, 2011. (Prepared by the Outcome DEcIDE Center, under Contract No. HHSA 290-2005-0035-I, TO5). AHRQ Publication No. 11-EHC023-EF. http://effectivehealthcare.ahrq.gov/reports/final.cfm.